Neural Filters in Adobe Photoshop

New functions & features with AI support

Artificial intelligence (AI) is already part of digital image processing, and Adobe Photoshop relies on it for its so-called “Neural Filters”. We have taken a closer look at the features for you.

What are Neural Filters in Adobe Photoshop?

Although Adobe Photoshop is not fundamentally based on AI, the software includes more and more tools built on AI and machine learning from Adobe Sensei. The Neural Filters are among them. According to Adobe, they make it possible to edit portraits, landscapes and other images faster and more effectively, and difficult workflows are sometimes reduced to just a few clicks. To achieve this, the filters add new, context-dependent pixels that are not present in the original image.

The Neural Filters were introduced in October 2020, and Adobe has been steadily expanding them since then. The filters are suitable for all kinds of tasks, from removing acne to colouring black and white photos or changing a person’s facial expression. Depending on the filter selected, the original image is processed automatically with AI support. Some filters rely on cloud processing, which requires an active internet connection.

The Neural Filters can be accessed via Filter → Neural Filters. The filters listed under Featured are officially released and fully functional. Currently available here are:

– Smart Portrait helps to creatively adjust portraits, e.g. expression, hair, age or pose.

– Skin Smoothing works with automatic face detection and removes acne and blemishes.

– Super Zoom compensates for the loss of resolution when zooming in on an image.

– JPEG Artifacts Removal removes artefacts resulting from JPEG compression.

– Colorize adds colour to black and white photos using algorithms.

– Style Transfer applies selected artistic styles to images.

– Makeup Transfer takes the makeup style of a reference image and applies it to the eye and mouth area of the original image.

Furthermore, there are a number of Beta Filters among the neural filters, which are currently still in the test phase and are being further developed. These currently include:

– Photo Restoration aims to remove scratches and to improve contrast and other elements in old photos.

– Harmonization tries to match the colour and tone of one layer to another to achieve a flawless composition.

– Landscape Mixer combines two images to change characteristics such as season and time of day.

– Depth Blur adds haze between the foreground and background to create a sense of environmental depth.

– Color Transfer attempts to transfer the colour palette of a reference image to the original image.

In addition, there is the so-called Wait List, which gives a foretaste of the neural filters from Adobe Photoshop that are planned for future versions.

The Neural Filter “Landscape Mixer” in a practical test

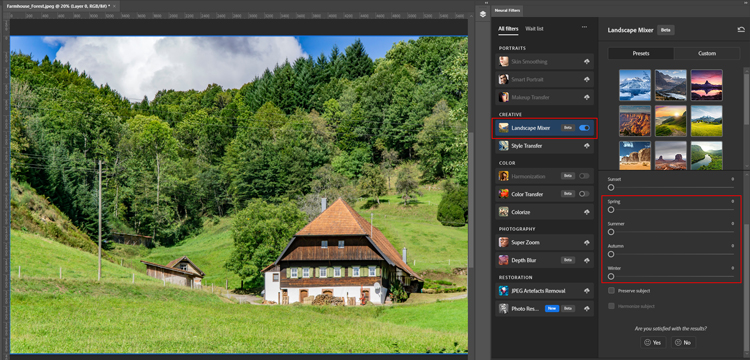

We have taken a closer look at the beta filter “Landscape Mixer”. With just a few clicks, Adobe promises, this neural filter can be used to change the season or even the time of day in a landscape scene.

For this purpose we need two images: the original image that is to be changed by the filter, and a reference image whose style is applied to the original image using AI support. Photoshop provides a selection of preset reference images, or you can upload your own image.

The filter automatically adjusts the brightness and colour of the original image during processing. It also changes other elements, such as the blossoms of flowers, the leaves and needles of trees, and the appearance of the sky. The applied effects can then be adjusted individually using sliders.

Here is our original image that we processed with the neural filter “Landscape Mixer”.

This is our original picture in combination with an autumn photo.

And here is our image in combination with a winter scene.

Our conclusion

We are, admittedly, surprised. The results are quite realistic and better than we would have thought. However, we notice some flaws.

Complex image elements in particular are often blurred or even altered after automatic processing. In our example image, the house has changed and power poles have been converted into tree trunks. To avoid this, individual elements must first be masked. This way, they are excluded from the automatic processing and can either be reworked manually or kept unprocessed. Without manual adjustment, the results also sometimes look a little too artificial, which quickly makes the editing as a whole obvious to the viewer. Overall, the results are quite appealing, but there is still potential for improvement; after all, the filter is still in beta status.
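To illustrate the idea behind masking, here is a minimal sketch in plain Python. It is not how Photoshop works internally; it only shows the general principle of a per-pixel blend in which a mask decides whether the original or the filtered pixel is kept. The function name `blend_with_mask` and the tiny grayscale sample images are hypothetical and chosen purely for illustration.

```python
def blend_with_mask(original, filtered, mask):
    """Blend two same-sized grayscale images pixel by pixel.

    A mask value of 1.0 keeps the original pixel (a protected element
    such as a house), 0.0 takes the filtered pixel, and values in
    between mix the two. All names here are illustrative assumptions.
    """
    if not (len(original) == len(filtered) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for orig_row, filt_row, mask_row in zip(original, filtered, mask):
        if not (len(orig_row) == len(filt_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        result.append([
            m * o + (1.0 - m) * f
            for o, f, m in zip(orig_row, filt_row, mask_row)
        ])
    return result


# Hypothetical 2x2 images: dark original, bright filtered version.
original = [[10.0, 20.0], [30.0, 40.0]]
filtered = [[200.0, 210.0], [220.0, 230.0]]
# Top-left pixel fully protected, bottom-left half protected.
mask = [[1.0, 0.0], [0.5, 0.0]]

blended = blend_with_mask(original, filtered, mask)
print(blended)  # [[10.0, 210.0], [125.0, 230.0]]
```

The protected pixel keeps its original value, the unmasked pixels take the filtered value, and the half-masked pixel lands in between. In Photoshop the same effect is achieved with layer masks rather than code.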

If you just want to quickly convert a photo to a different season or time of day and can overlook minor (or even major) irregularities, this Neural Filter is quite sufficient. What would normally require very advanced image editing skills and several hours of work, the Neural Filter “Landscape Mixer” has done with just a few clicks.

However, the fast results of the Neural Filters can tempt users to overdo it when editing an image (after all, it’s so easy), and the result then quickly becomes unrealistic. Despite the latest technology, a careful eye and a sure instinct are still required so that the use of Photoshop does not become obvious.

Adobe Photoshop’s Neural Filters are an interesting and in many cases helpful addition. However, high-quality results with perfect details still require manual processing and skill.
